Prototype

Translated Subtitles in Real-Time

What if…

we could display translated subtitles on any live video feed?

Problem

Translated subtitles for videos in foreign languages are nothing new. Those subtitles are usually curated and edited in post-production. At a live event, such as a conference or a video call, curated translations are impossible or at least very hard to provide, because until now the translation had to be done manually.

Applications such as MS Teams already offer real-time subtitling of the spoken words in the original language. This is nice, but it does not help us translate the content.

Solution

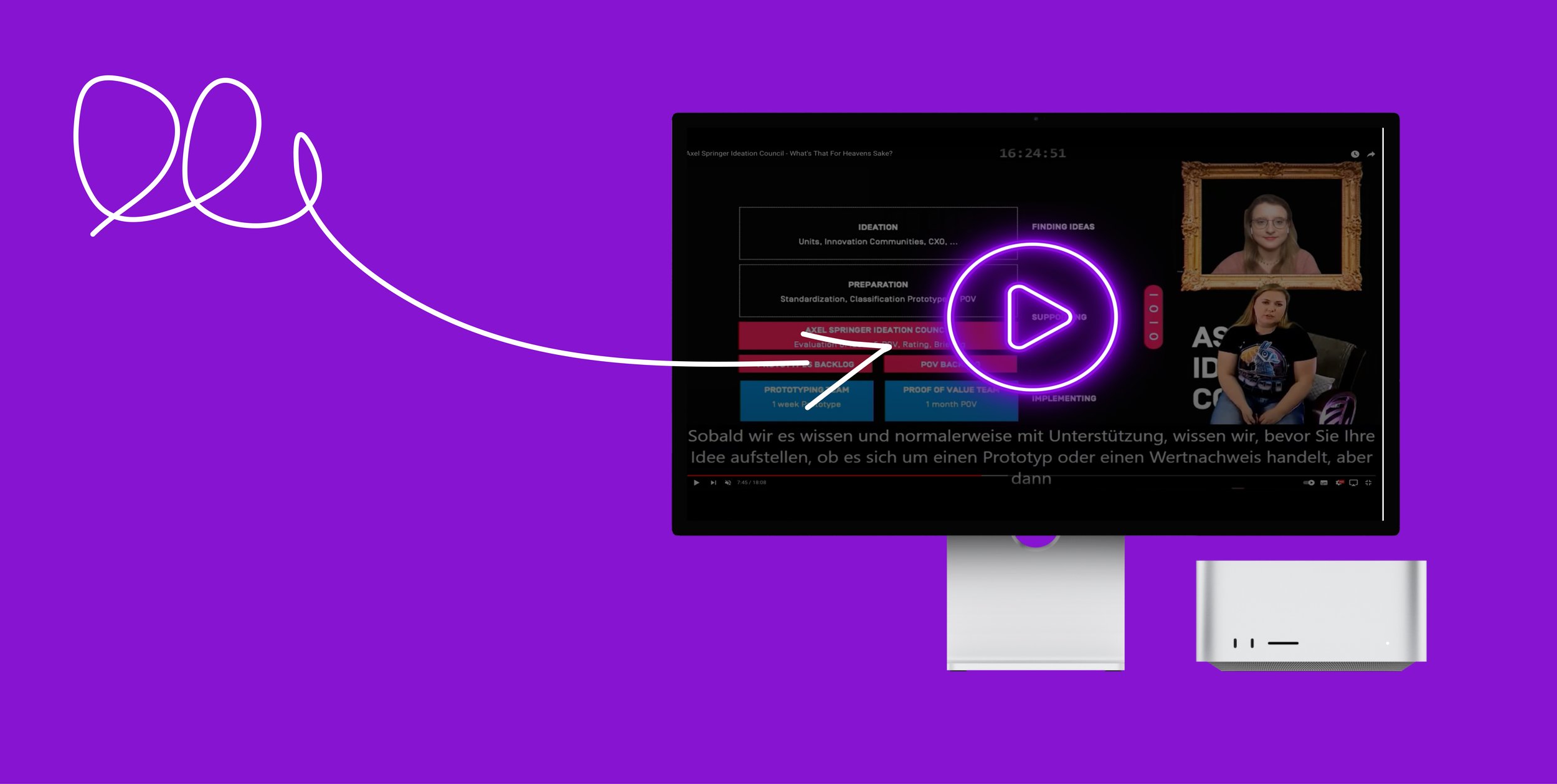

We tested the new Google Media Translation API with the goal of converting an audio stream from a microphone into high-quality translated text with minimal delay. To display the subtitles, we used OBS with a transparent overlay, so that the viewer sees the original video enriched with the generated subtitles.
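
One possible way to hand translated text to an overlay is sketched below. It is an illustration under assumptions, not the prototype's actual code: it assumes the overlay is an OBS text source configured to read its content from a file, and the helper names `format_subtitle` and `write_subtitle`, the 42-character line width, and the two-line limit are all hypothetical choices.

```python
import os
import tempfile
import textwrap


def format_subtitle(text, width=42, max_lines=2):
    """Wrap the text and keep only the most recent lines so the overlay stays small."""
    lines = textwrap.wrap(text, width=width)
    return "\n".join(lines[-max_lines:])


def write_subtitle(path, text):
    """Write the formatted subtitle to `path`, replacing the old file in one step."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file first so a reader never sees a half-written subtitle.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        handle.write(format_subtitle(text))
    os.replace(tmp_path, path)
```

Each time the translation service returns new text, a call such as `write_subtitle("subtitle.txt", translated_text)` would update the file the overlay displays. Keeping only the last lines means long sentences scroll instead of covering the video.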

Challenges

The basic setup of this prototype was straightforward and worked without major impediments. We had some small problems getting the SoX library to work on some of our test systems, and handling multiple microphones failed in some tests. In the end, we created a setup in which we mixed all audio streams before processing and thereby simulated a single microphone. A production setup must of course handle this differently. An important improvement for videos with multiple speakers will be to visualize who is speaking at any given time.
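
The mixing workaround can be sketched as follows. This is a minimal illustration and not the prototype's actual code: it assumes every microphone delivers signed 16-bit PCM in native byte order with the same sample rate and channel layout, and `mix_pcm16` is a hypothetical helper name.

```python
import array


def mix_pcm16(chunks):
    """Mix several chunks of signed 16-bit PCM audio into a single chunk.

    Samples are summed position by position and clipped to the 16-bit range.
    The result is as long as the shortest input chunk.
    """
    if not chunks:
        return b""
    streams = []
    for chunk in chunks:
        samples = array.array("h")  # "h" = signed 16-bit integer
        samples.frombytes(chunk)    # raises ValueError on an odd byte count
        streams.append(samples)
    length = min(len(samples) for samples in streams)
    mixed = array.array("h", bytes(2 * length))  # zero-filled output buffer
    for i in range(length):
        total = sum(samples[i] for samples in streams)
        # Clip instead of wrapping around, which would be heard as loud crackling.
        mixed[i] = max(-32768, min(32767, total))
    return mixed.tobytes()
```

The mixed bytes can then be fed to the translation service as if they came from one microphone. The drawback mentioned above remains: after mixing, the information about which speaker produced which words is lost.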

The Team

Idea by Tarek Madany Mamlouk